The Router and Filter transformations behave similarly, but Router has some additional features. Informatica added the Router transformation in version 5.x. Let's first look at the Filter transformation.

----------------------

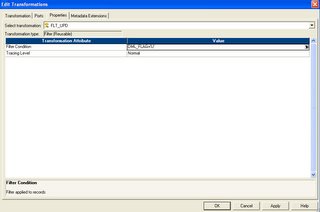

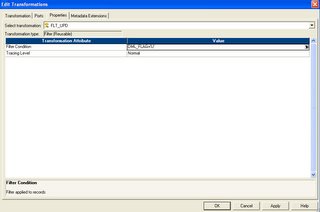

1. The Filter transformation is an active transformation, meaning it can change the number of rows that pass through it.

2. The Filter transformation allows you to filter rows in a mapping.

3. All rows that meet the filter condition pass through the transformation; rows that do not meet it are dropped.

4. It has only two types of ports: input and output.

5. Place the Filter transformation as close to the source as possible for better performance. This makes sense because fewer rows then have to go through the remaining transformations.

6. You cannot merge rows from different transformations in a Filter transformation.

7. You can use an expression as the filter condition.
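The points above can be sketched in a few lines of Python. This is only an illustration of the filtering behavior, not Informatica code: the function `filter_rows`, the sample `employees` rows, and the salary condition are all hypothetical names invented for the example.

```python
from typing import Callable, Dict, Iterable, Iterator

Row = Dict[str, object]


def filter_rows(rows: Iterable[Row], condition: Callable[[Row], bool]) -> Iterator[Row]:
    """Pass through rows that meet the condition; drop the rest."""
    for row in rows:
        if condition(row):
            yield row


# Hypothetical source rows, made up for this sketch.
employees = [
    {"name": "Asha", "dept": "SALES", "salary": 52000},
    {"name": "Ben", "dept": "HR", "salary": 31000},
    {"name": "Chen", "dept": "SALES", "salary": 28000},
]

# The filter condition is an expression evaluated once per row.
high_paid = list(filter_rows(employees, lambda r: r["salary"] > 30000))

# Fewer rows come out than went in, so any later step has less work to do.
print(len(employees), "rows in,", len(high_paid), "rows out")
```

Because the filter reduces the row count, running it before other per-row work (lookups, expressions, and so on) means that work is done on fewer rows, which is the reasoning behind point 5.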

Router
-------

A Router transformation is similar to a Filter transformation because both let you use a condition to test data. Router is an enhancement of the Filter transformation, and developers now often prefer it to Filter. In a Filter transformation you can test data against only one condition, whereas in a Router transformation you can test data against more than one condition. The same problem can be solved with either transformation, but with Filter you need a separate Filter transformation for each test condition. With Router you can define several test conditions in the same transformation, and Informatica creates a separate group for each one.

1. Router is also an active transformation.

2. It has only two types of ports: input and output.

3. It has one default group. If a row does not satisfy any test condition, it falls into the default group.

4. Multiple test conditions can be created, each as its own output group.
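The grouping behavior described above can be sketched in Python as follows. This is a rough model rather than Informatica code: `route_rows`, the group names, and the sample rows are hypothetical. The sketch also assumes that a row satisfying more than one condition is sent to every matching group; verify that against the Informatica documentation for your version.

```python
from typing import Callable, Dict, Iterable, List

Row = Dict[str, object]
DEFAULT_GROUP = "DEFAULT"  # hypothetical name for the default group


def route_rows(
    rows: Iterable[Row],
    groups: Dict[str, Callable[[Row], bool]],
) -> Dict[str, List[Row]]:
    """Test each row against every group condition in a single pass."""
    routed: Dict[str, List[Row]] = {name: [] for name in groups}
    routed[DEFAULT_GROUP] = []
    for row in rows:
        matched = False
        for name, condition in groups.items():
            # Assumption: a row goes to every group whose condition it meets.
            if condition(row):
                routed[name].append(row)
                matched = True
        if not matched:
            # Rows that satisfy no test condition fall into the default group.
            routed[DEFAULT_GROUP].append(row)
    return routed


# Hypothetical source rows, made up for this sketch.
employees = [
    {"name": "Asha", "dept": "SALES", "salary": 52000},
    {"name": "Ben", "dept": "HR", "salary": 31000},
    {"name": "Chen", "dept": "SALES", "salary": 28000},
]

routed = route_rows(
    employees,
    {
        "SALES": lambda r: r["dept"] == "SALES",
        "HIGH_PAID": lambda r: r["salary"] > 50000,
    },
)

for group_name, group_rows in routed.items():
    print(group_name, [r["name"] for r in group_rows])
```

Doing the same job with filters would take one `filter`-style pass per condition, plus a further pass with the negation of all conditions to collect the rows that the default group catches here.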